The Scene Grammar Lab is interested in a wide variety of aspects of visual cognition, particularly visual attention and visual memory during scene perception. The lab’s core research areas therefore include top-down guidance in scene search, the neural representation and development of scene knowledge, and action-perception interactions in real-world scenarios. We use a variety of methodologies in the lab, including psychophysics, gaze-contingent and real-world eye-tracking, pupillometry, and EEG recordings.

Our visual world is complex, but its composition usually follows a set of rather strict rules. Knowledge of these rules...

Global and local scene features, as well as prior knowledge of where objects are most probably found in scenes, seem to enable us to rapidly narrow down...

Even though our memory of the visual world is not a one-to-one imprint of what meets our eyes, we seem to be able to detect small changes in...

We are not born knowing the rules of our world. How do we acquire scene grammar and does this relate to language acquisition? We have kids search for our lab sheep "Wolle", who loves hiding in scenes!

How much of what we have learned from laboratory experiments is actually valid when we actively interact with our visual world?

Fixation-Related Potentials during Scene Perception

What processes are dominant in your brain while you freely view specific parts of a naturalistic scene? By combining EEG with eye tracking, we want to find out more...

Active Vision in Immersive Virtual Reality Environments

Decades of screen-based 2D paradigms in cognitive psychology have provided us with an abundance of insights into the human mind. How do these hold up in naturalistic, navigable 3D environments with realistic task constraints?

Eye-Tracking

Your gaze reveals a lot about what is going on in your head: how you react to what you see and how you choose to accomplish a task.

In particular, at the SGL we are interested in how you look while looking for objects...

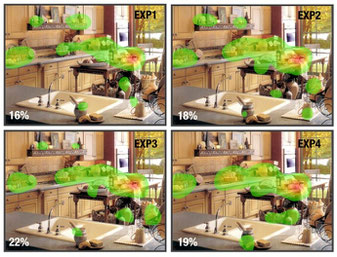

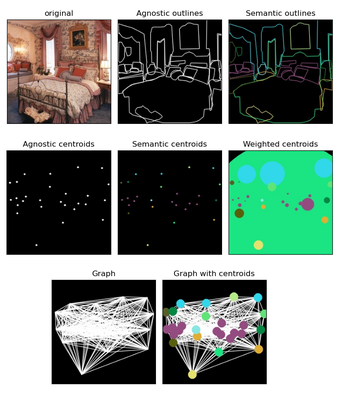

Visual search modelling

Modelling cognitive behavior serves two goals: predicting how someone will behave, and testing hypotheses and building theories by comparing a model's output to the behavior actually observed in a human study.

Both goals are extremely interesting and can potentially yield great results that have many applications.

For example, at the SGL, we are working on a model of visual search that predicts a temporal sequence of gaze positions...